Overview

Teaching Staff Perspectives on the Role of Generative AI in Higher Education

We surveyed more than 80 UniSA teaching academics in 2024, representing a range of disciplines and up to 30 years of teaching experience. Staff responded after more than a year of working with Generative AI tools in their teaching practice. Their insights highlight both opportunities and challenges in using AI to support student learning. Many educators observed that AI tools can assist with structuring writing, encouraging creativity, and providing early-stage feedback. However, concerns were also raised about the risk of widening performance gaps among students if appropriate guidance and support are not embedded in learning activities.

Across STEM, Health, Business, Education, and Creative disciplines, staff identified practical strategies for adapting course and assessment design to maintain academic integrity and student engagement. These included the use of in-person assessment, designing tasks that prioritise critical thinking and applied problem-solving, and explicitly teaching students how to use AI tools in ethical and purposeful ways.

Participant Profile

Of the 80 staff who responded to the survey, 76 identified their academic unit. The distribution reflects a broad cross-section of disciplines: 19 respondents were from STEM, 17 from Business, 15 from Allied Health & Human Performance, 13 from Education Futures, and 16 from Creative. A further 3 respondents were from Justice & Society, and 2 from Clinical & Health Sciences.

Results and Discussion

GenAI Experience of our staff

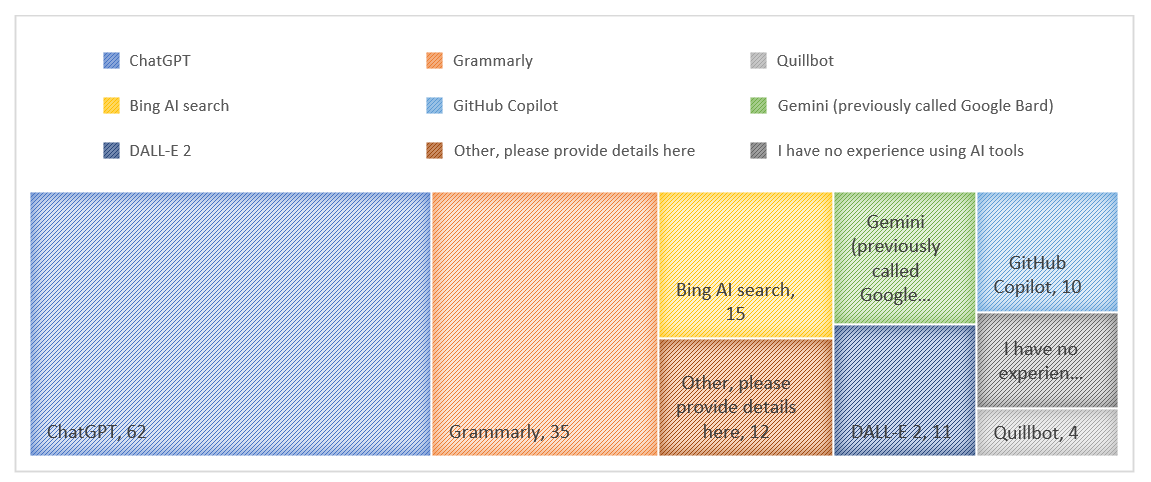

Participants were asked about their familiarity with AI Writing Tools (AIWTs) by selecting from a list of commonly used tools.

The total number of responses exceeded the number of participants, as many selected more than one tool. Most participants reported familiarity with ChatGPT, Grammarly, Bing, Gemini, and GitHub Copilot. Others identified additional tools, including Eduaide, Elicit, Wolfram, Microsoft Copilot (preview), Midjourney, Paletter.ai, Kaiber, Adobe Express, Wordtune, Arch-e, Stability AI, Stable Diffusion, Leonardo.ai, Craiyon.ai, Playground AI, Claude, Reka, Mistral, local LLMs (e.g. Llama), Fooocus (Stable Diffusion), Poe, Hemingway, Connected Papers, and Meta AI. Eight participants reported having no experience using AI tools.

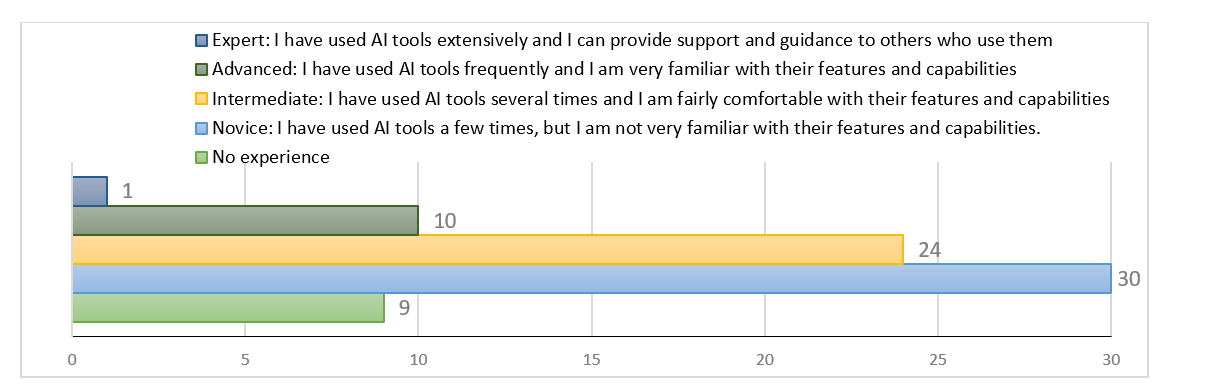

Figure 1 - Staff familiarity with AI writing tools in 2024

Participants were asked to gauge their level of experience with AIWTs, categorising their expertise as: No experience, Novice, Intermediate, Advanced, or Expert.

Approximately 12% (9 participants) reported having no experience, broadly consistent with the earlier finding that eight participants had not used any AI tools. Additionally, 41% identified as Novice, 32% as Intermediate, and 14% as Advanced, while one participant categorised themselves as an Expert.

Figure 2 - Staff experience of using GenAI tools in 2024

Use of GenAI in course work

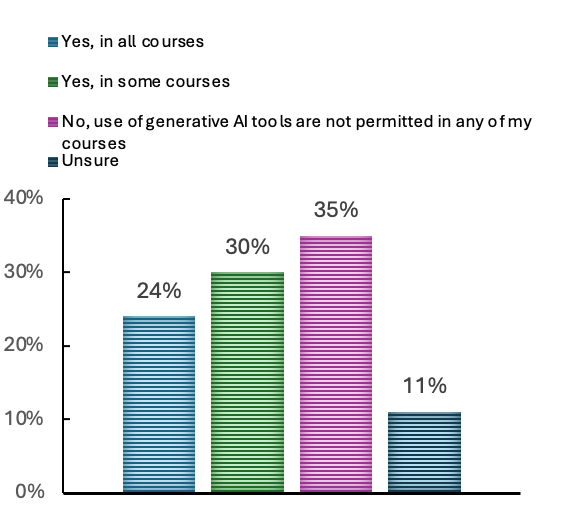

Participants were asked if they allowed students to use GenAI tools in their courses.

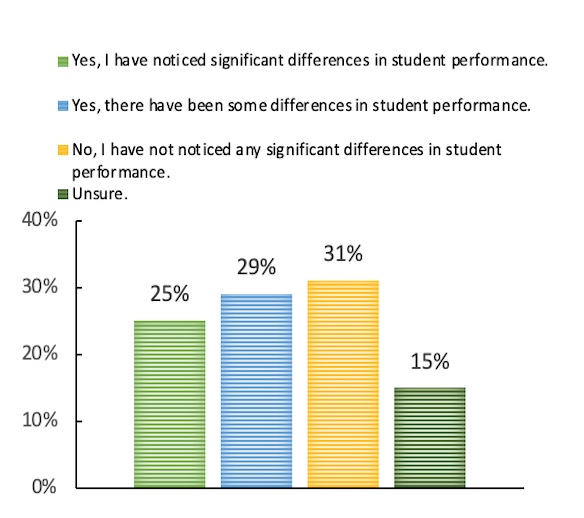

The majority of academic staff (54%) allowed students to use GenAI tools in their courses, as shown in Figure 3, and a high proportion (54%) observed a change in student performance (Figure 4). Academic staff recognised the need to build students' confidence in using GenAI as a learning tool. They reiterated that educators must provide clear guidelines, including examples of ethical and appropriate GenAI use within their course. Some respondents felt that marking rubrics needed adjustment to reflect GenAI integration.

Figure 3 - Academic Responses to Allowing GenAI Use in Their Courses

Figure 4 - Noticed Differences in Student Performance Since the Introduction of GenAI

Preferred Assessment Methods

Participants were asked to select their preferred assessment methods now that GenAI is more easily accessible.

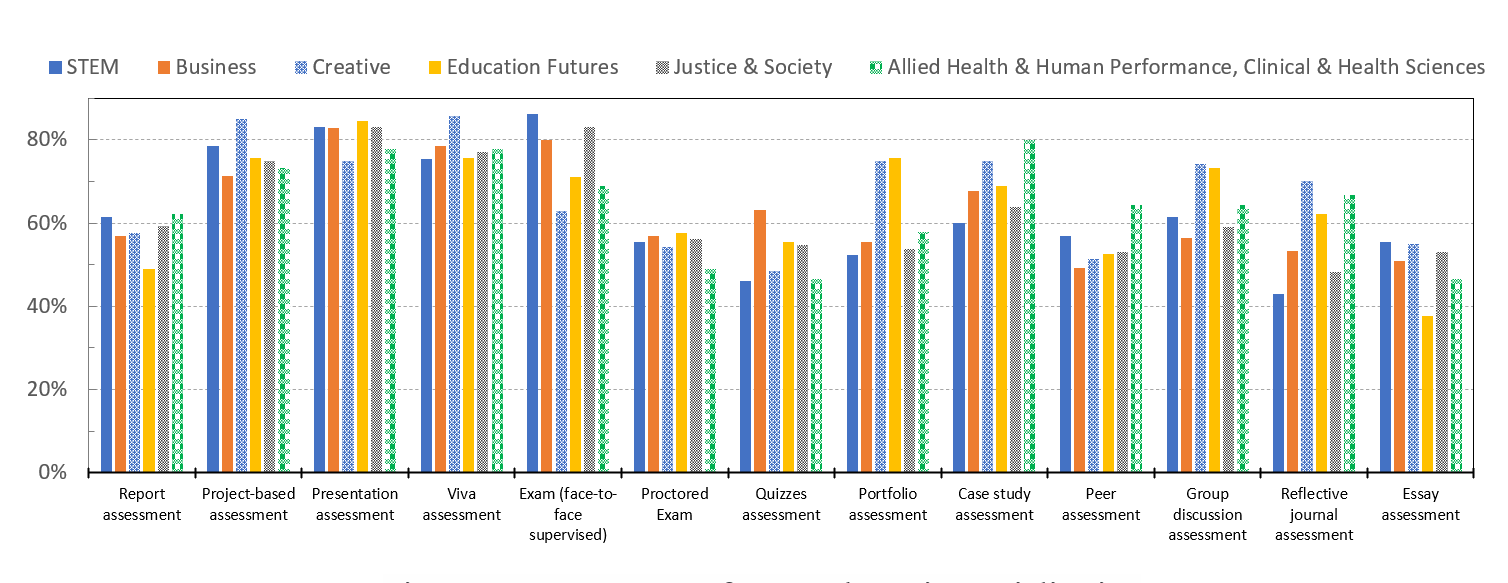

Most units showed nearly the same preferences. The STEM unit prioritised face-to-face exams and presentations, while case studies were the top preference for the Allied Health & Human Performance and Clinical & Health Sciences units. Portfolio assessments and group discussion assessments were also among the preferred methods for the Creative and Education Futures units.

Figure 5 - Assessment preferences by unit specialisation

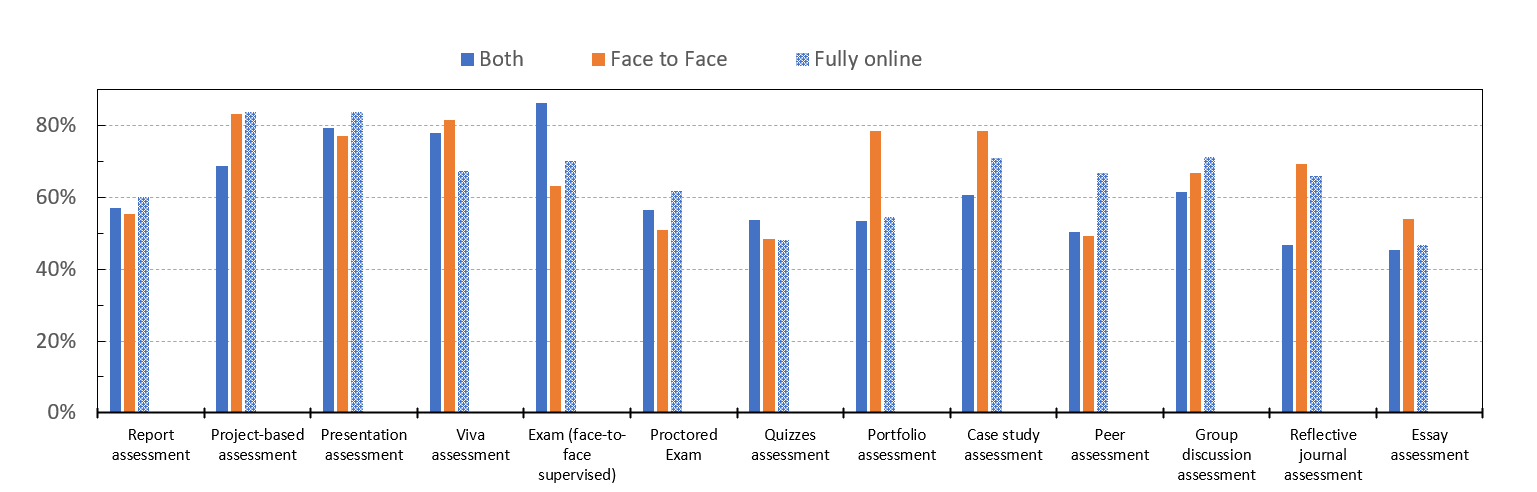

Project-based assessments and presentations are favoured in both online and face-to-face modes. Notably, project-based assessments scored highest in face-to-face mode, suggesting that direct interaction amongst students may limit the challenges posed by AIWTs. Presentations, however, are equally preferred in both modes. Face-to-face supervised exams are preferred by staff who teach in both face-to-face and online modes, whereas reflective journals and group discussions are more favoured in online settings, possibly because these formats align well with digital platforms.

Figure 6 - Assessment preferences by mode of delivery

Comments from UniSA Staff on GenAI

You may wish to refer to the staff comments below to help inspire your thinking about how GenAI could be incorporated into your course design.

195.0 KB PDF document