
Overview

Academic dishonesty has always existed in higher education. While most students act ethically, a small number will always attempt to cheat. Traditionally, plagiarism-detection tools have been used to support accountability and help validate student work, not simply to “catch” misconduct but to reinforce fairness and responsibility. Generative AI (GenAI) has made this challenge more complex: it is now built into platforms widely available to students, making its use difficult to detect.

Academic Integrity Officers (AIOs) play a vital role in this changing environment. Their work is not just reactive but proactive:

- supporting academics in developing robust assessment strategies,

- guiding fair investigations, and

- ensuring procedural rigour in suspected misconduct cases.

Participant Profile

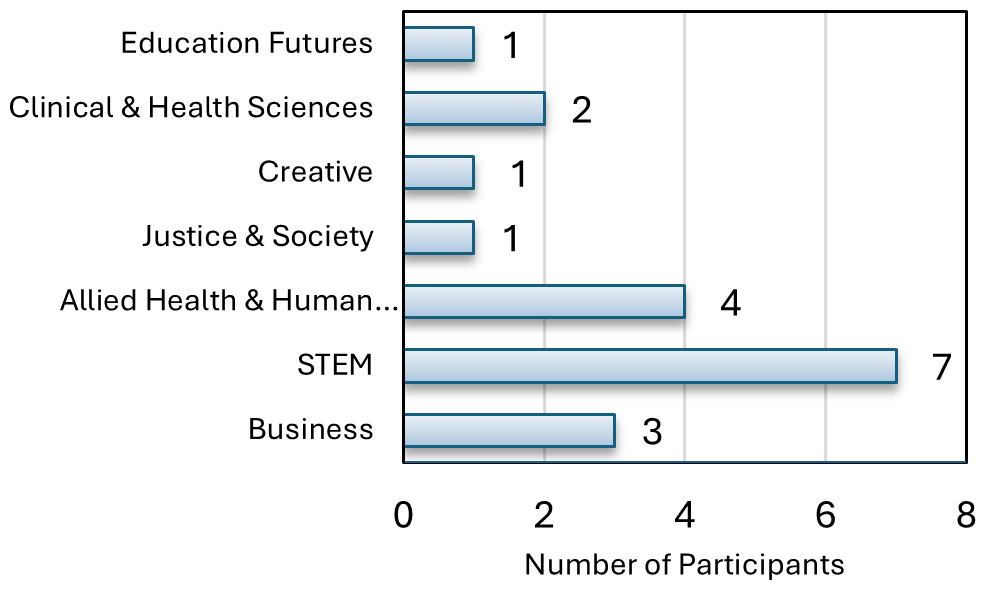

Figure 1 below shows the distribution and strength of representation of participants in the study. Nineteen AIOs participated in the study across seven academic units: 7 were from UniSA STEM (39%), 4 from UniSA Allied Health & Human Performance (22%), 3 from UniSA Business (17%), and 2 from UniSA Clinical & Health Sciences (11%), with 1 participant each from UniSA Justice & Society (6%), UniSA Education Futures (6%), and UniSA Creative (6%).

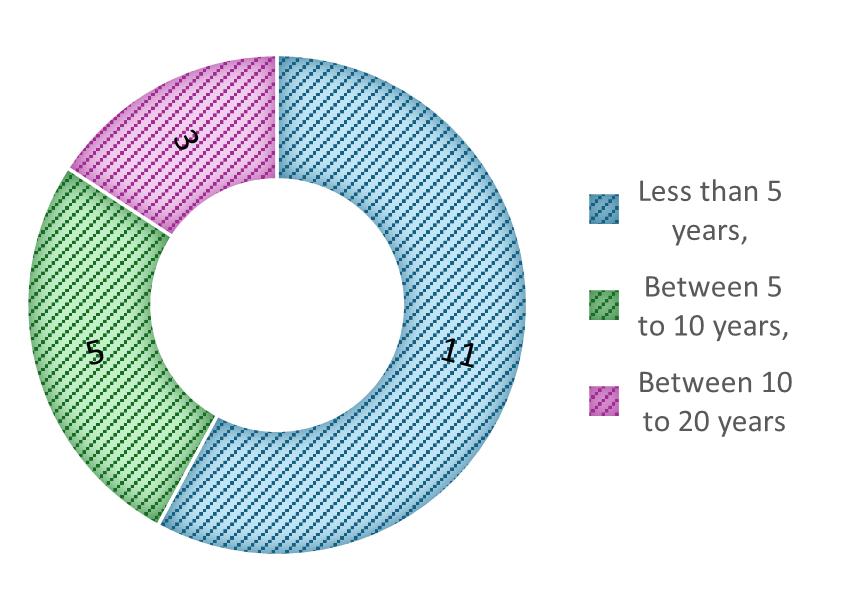

In their role as AIOs, participants had varying levels of experience: three had more than 10 years of academic integrity experience (16%), five had 5–10 years (26%), and eleven had less than 5 years (58%), as shown in Figure 2.

Figure 1 - Participants across academic units

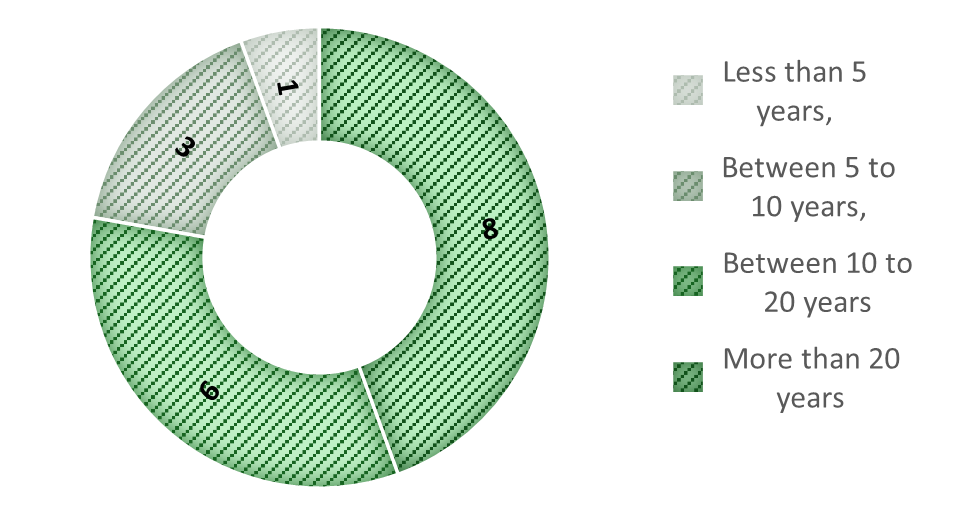

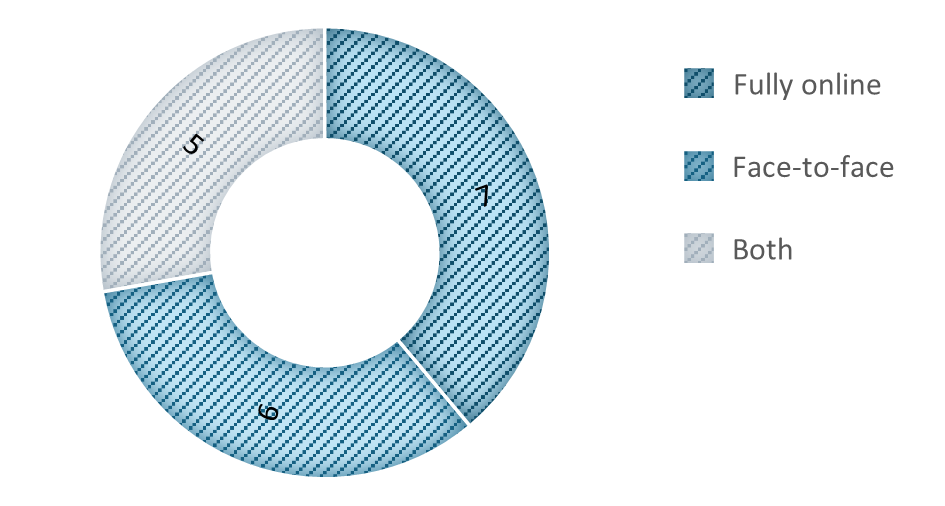

Figure 2 - Participants' experience as academic integrity officers (AIOs)

Most participants were highly experienced academic staff, with 8 having more than 20 years of academic experience (44%), 6 between 10–20 years (33%), 3 between 5–10 years (17%), and 1 with less than 5 years (6%), as shown in Figure 3. Regarding their teaching mode, 7 teach fully online (39%), 6 teach face-to-face only (33%), and 5 teach in both online and face-to-face modes (28%), as illustrated in Figure 4.

Figure 3 - Participants' teaching experience

Figure 4 - Teaching mode of participants

Results and Discussion

Familiarity with GenAI Tools

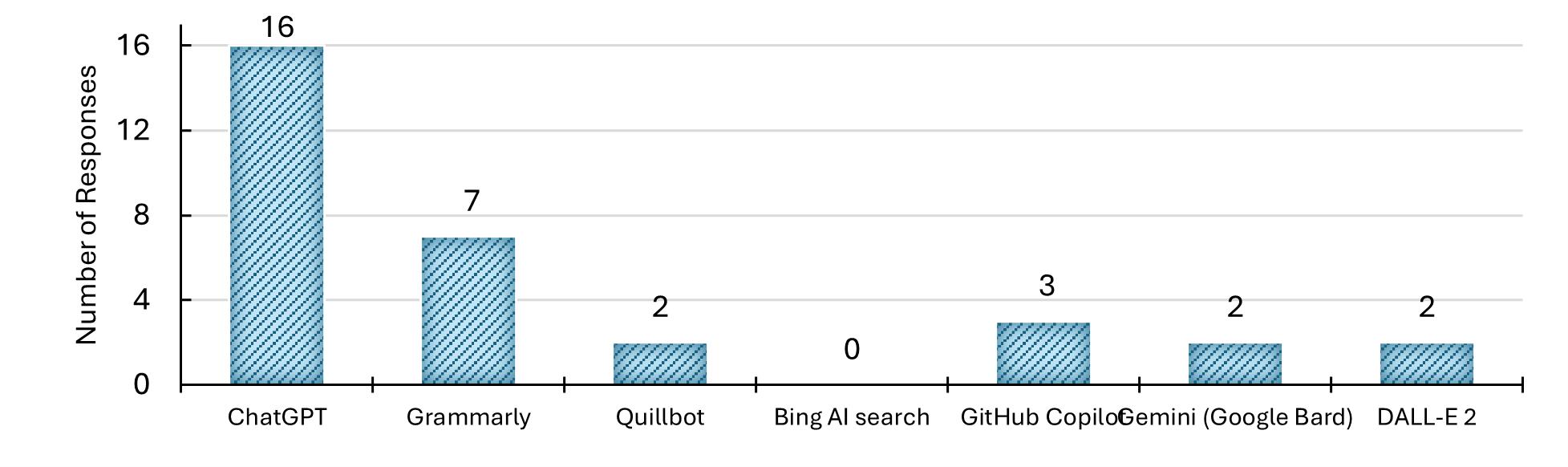

AIOs were asked to indicate their familiarity (in terms of experience) with GenAI tools by selecting from a list of the tools most used at the time of the survey. Respondents could also add tools that were not listed.

As shown in Figure 5 below, AIOs reported familiarity with all the listed tools except Bing GenAI search. Participants could select multiple tools, so the total number of selections exceeds the number of participants. ChatGPT (94%) and Grammarly (41%) were the most widely recognised, consistent with awareness levels reported at other higher education institutions (HEIs) (Yusuf et al., 2024). Grammarly is included in the GenAI landscape because it now uses GenAI capabilities for content enhancement, including sentence restructuring, tone modification, and text expansion, extending beyond its primary function as a traditional editing tool. Notably, not all 19 respondents had used ChatGPT, despite its extensive popularity since its release in November 2022. This may reflect negative sentiment among some academic staff, particularly fear and anger about ChatGPT’s role in higher education, concerns about academic misconduct, and broader ethical issues (Mamo et al., 2024). Using the free-text option, respondents listed Youdao and Copilot as additional tools.

Figure 5 - Distribution of GenAI tool usage amongst participant AIOs

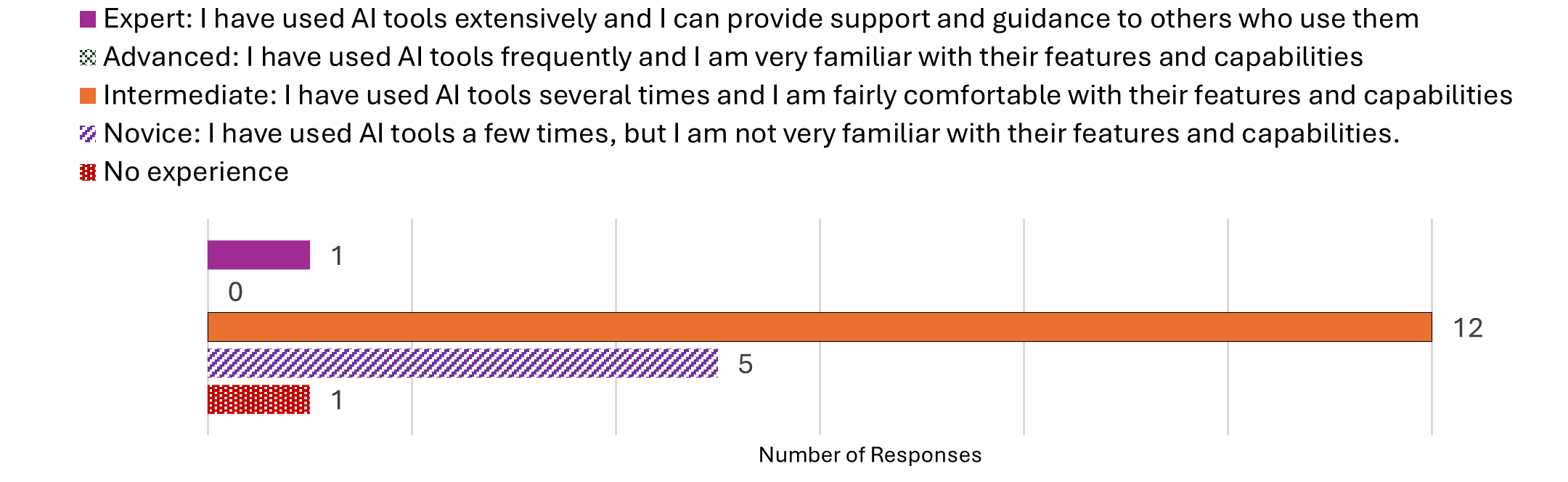

Expertise in Using GenAI Tools

AIOs were then asked to categorise their expertise in using GenAI tools as No experience, Novice, Intermediate, Advanced, or Expert.

The results in Figure 6 below show that most AIO participants reported intermediate-level (63%) or novice-level (26%) experience, one participant (5%) reported no experience, and only one participant rated their expertise above Intermediate. This suggests that academics in higher education are cautiously adopting GenAI tools despite earlier anxiety and hesitation, and that the potential benefits of these tools are gradually being recognised. However, gaps in technical knowledge and usability still limit general acceptance, particularly among AIO participants who identified as "Novice" or "No experience". The near absence of "Advanced" and "Expert" users could also imply that academic staff are yet to be adequately equipped by their employers with the resources and training needed to become proficient with GenAI tools. It may also reflect ongoing ethical concerns and the lack of a clear policy framework for GenAI adoption in higher education.

Figure 6 - AIOs’ experience levels with GenAI tools

Perspectives on GenAI’s Impact on Assessment Security and Academic Integrity

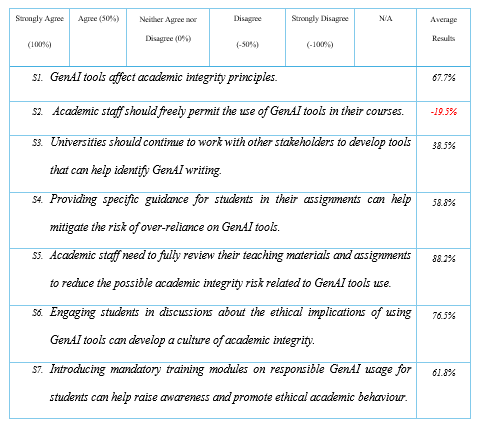

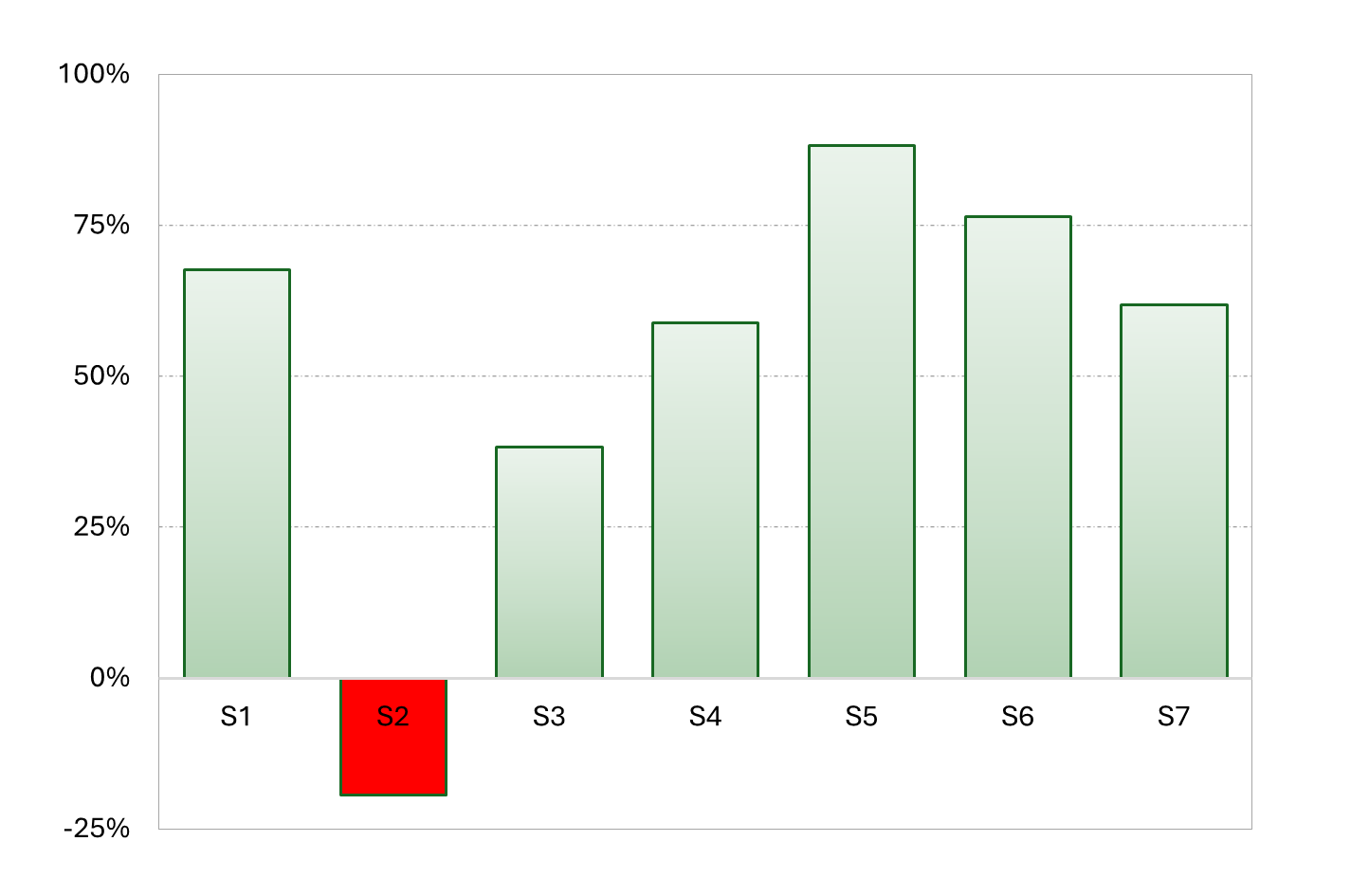

AIOs were asked about their perspectives on GenAI’s impact on assessment security and academic integrity.

As shown in Table 1 below, AIOs responded to seven statements (S1–S7) on a 5-point Likert scale, rating their level of agreement from ‘Strongly Agree’ to ‘Strongly Disagree’. To allow responses to be averaged, each rating was assigned a numerical value: Strongly Agree (100%), Agree (50%), Neither Agree nor Disagree (0%), Disagree (-50%), and Strongly Disagree (-100%). Table 1 and Figure 7 summarise the average agreement scores for each statement.
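The scoring rule amounts to mapping each Likert rating to its assigned value and averaging those values per statement. The Python sketch below illustrates this under stated assumptions: it assumes each statement's score is an unweighted arithmetic mean over all responses received (the text does not say how skipped items were handled), and the function name `average_agreement` and the sample responses are hypothetical, not study data.

```python
# Values assigned to each Likert rating, as stated in the text.
LIKERT_SCORES = {
    "Strongly Agree": 100,
    "Agree": 50,
    "Neither Agree nor Disagree": 0,
    "Disagree": -50,
    "Strongly Disagree": -100,
}


def average_agreement(responses: list[str]) -> float:
    """Return the mean agreement score (in %) for one statement.

    Assumption: a plain arithmetic mean over all responses received.
    """
    if not responses:
        raise ValueError("no responses to average")
    return sum(LIKERT_SCORES[r] for r in responses) / len(responses)


# Hypothetical example: 4 x Strongly Agree, 3 x Agree, 1 x Disagree
sample = ["Strongly Agree"] * 4 + ["Agree"] * 3 + ["Disagree"]
print(average_agreement(sample))  # 62.5
```

A score near 100% indicates strong collective agreement, a score near 0% indicates neutral or evenly split views, and negative scores indicate net disagreement.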

Table 1 - AIOs' views on assessment security and integrity

Figure 7 - Average agreement scores for survey statements (S1–S7)

Specific Responses

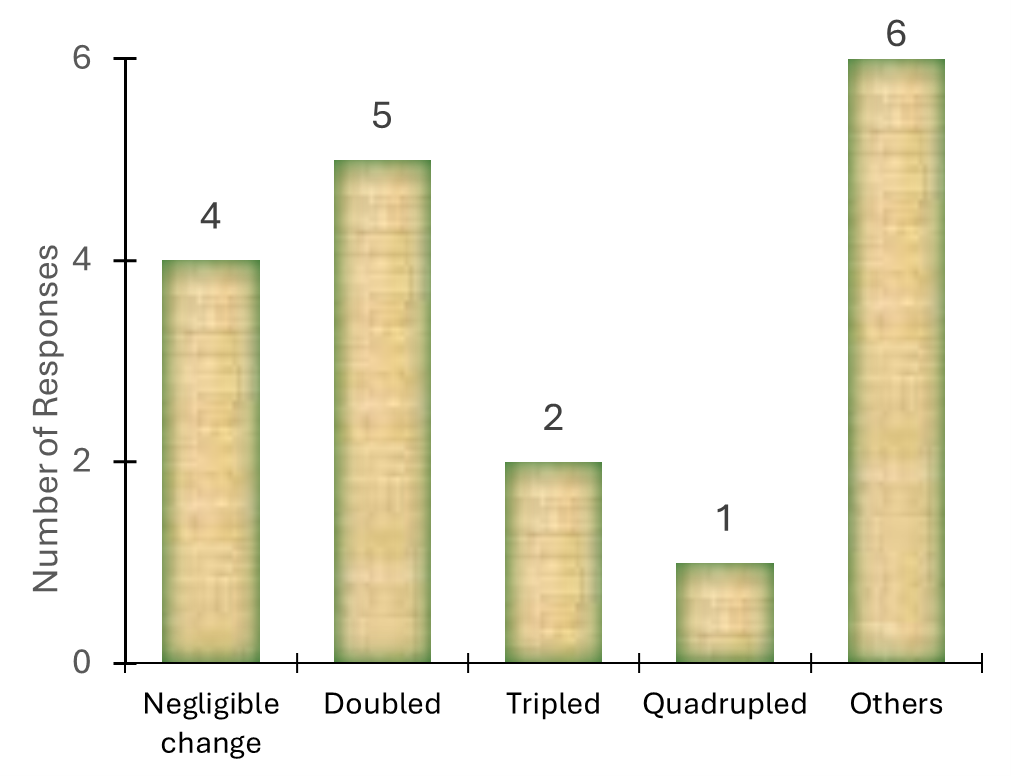

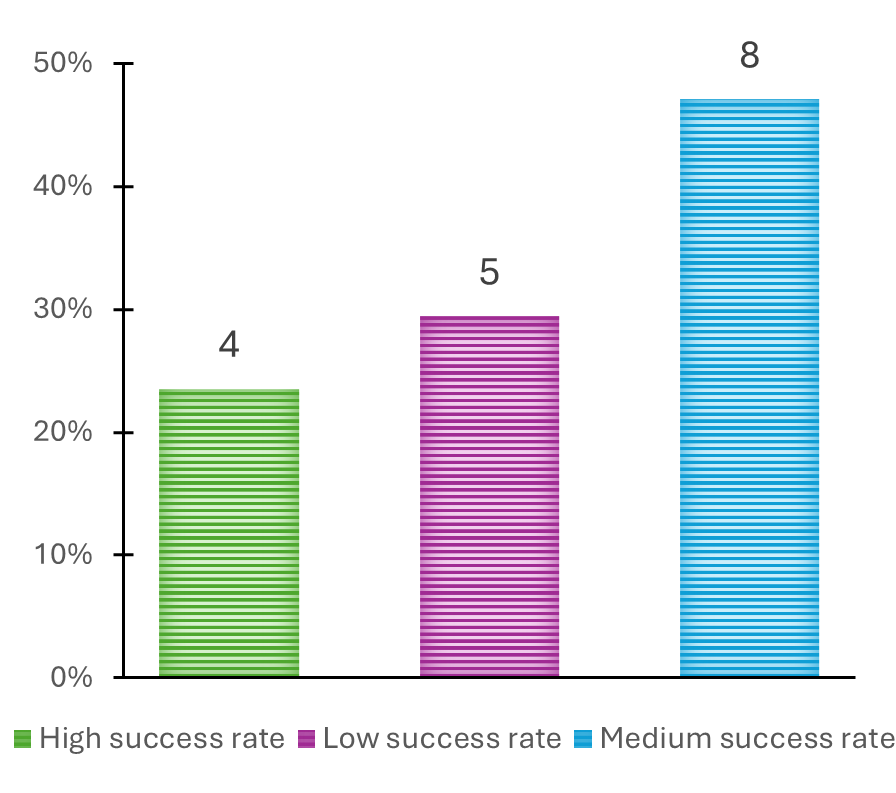

The AIO participants’ specific responses on key areas related to GenAI and academic integrity (Table 1) are summarised below. They cover safeguarding ethical standards and assessment security (S5, S1), the need for clear assignment-specific guidance (S4, S5), the role and permissibility of GenAI detection tools in courses (S3, S2), and insights into case numbers and challenges arising from GenAI-related academic misconduct (Figures 8 and 9).

Figure 8 - Rise in academic misconduct cases since the emergence of GenAI tools

Figure 9 - AIOs' success rates for GenAI-related academic misconduct investigations

Key Challenges

AIOs were then asked about the key challenges they faced in confirming misconduct, as opposed to cases where no conclusive determination could be made. Their responses highlighted four major challenges, shown below:

Investigating GenAI-related Academic Misconduct – AIOs' Approach

AIOs employ a range of approaches and strategies to investigate suspected GenAI use in student assessments. Their methods focus on gathering evidence, engaging students in discussion, and identifying inconsistencies in writing style and citation use. A summary of key strategies used across different disciplines is provided below:

Institutional Guidance to Students on Ethical GenAI Use

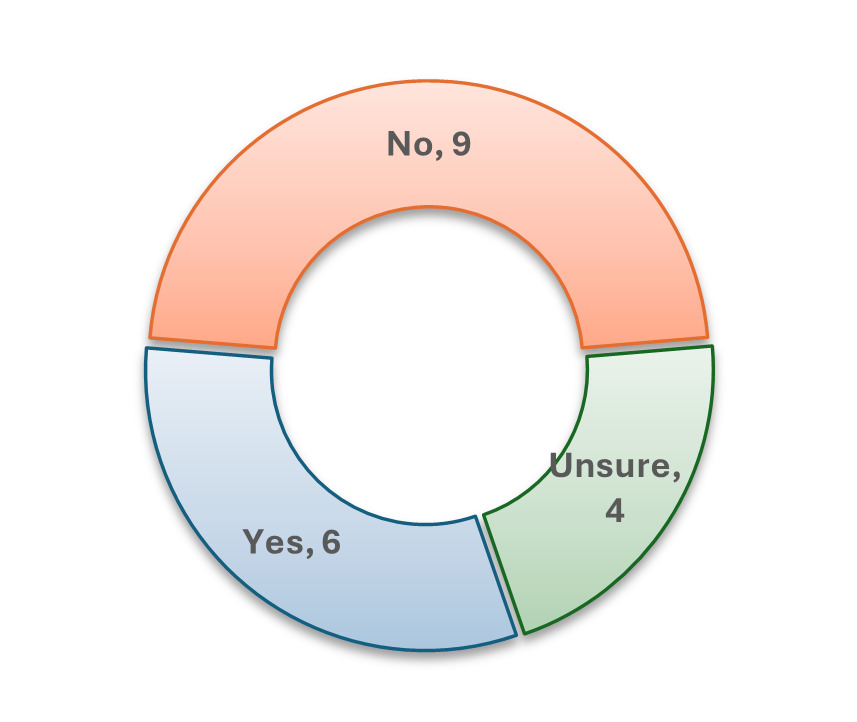

The AIOs were asked, "Do you think students are adequately informed by the university or teaching staff (course coordinators, course facilitators, or tutors) about the ethical and permissible use of GenAI tools?"

The majority of participants answered "No", followed by "Unsure", as shown in Figure 10. This suggests that, from the AIOs’ perspective, students are not receiving sufficient guidance on how to use GenAI tools ethically. The resulting lack of clarity could cause confusion about GenAI use and contribute to unintentional academic misconduct. These views align with findings from previous studies (Chan & Hu, 2023; Gonsalves, 2024; Laflamme & Bruneault, 2025; Luo, 2024).

Those who answered “No” or “Unsure” were then asked what information students need from the university or teaching staff.

A summary of responses is given below:

Figure 10 - AIOs' perceptions of GenAI ethics awareness among students

- Offering training sessions and resources for staff on designing secure and authentic assessments while incorporating GenAI literacy into teaching and learning – (UniSA STEM).

- Providing discipline-specific examples and resources outlining both appropriate and inappropriate GenAI use cases – (UniSA STEM).

- Ensuring clear guidance on how GenAI can be used appropriately, for example, as an idea generator or supplementary tool, and specifying whether GenAI-produced content should be included in submissions – (UniSA Education Futures).

- Incorporating motivational messaging to emphasise the value of authentic learning, such as through videos that contrast the job readiness and career prospects of an authentic learner with those who are overly reliant on GenAI – (UniSA STEM).

- Establishing a standardised university-wide approach to GenAI use, ensuring consistency across courses and faculties, and explicitly stating policies on permissible and impermissible GenAI use – (UniSA Allied Health & Human Performance).

- Ensuring course websites contain clear GenAI guidelines to make information easily accessible to students – (UniSA Business, UniSA STEM).

- Implementing a dedicated and compulsory first-year course on writing, researching, referencing, and ethical GenAI use – (UniSA Allied Health & Human Performance).

Preparing AIOs for the GenAI Era

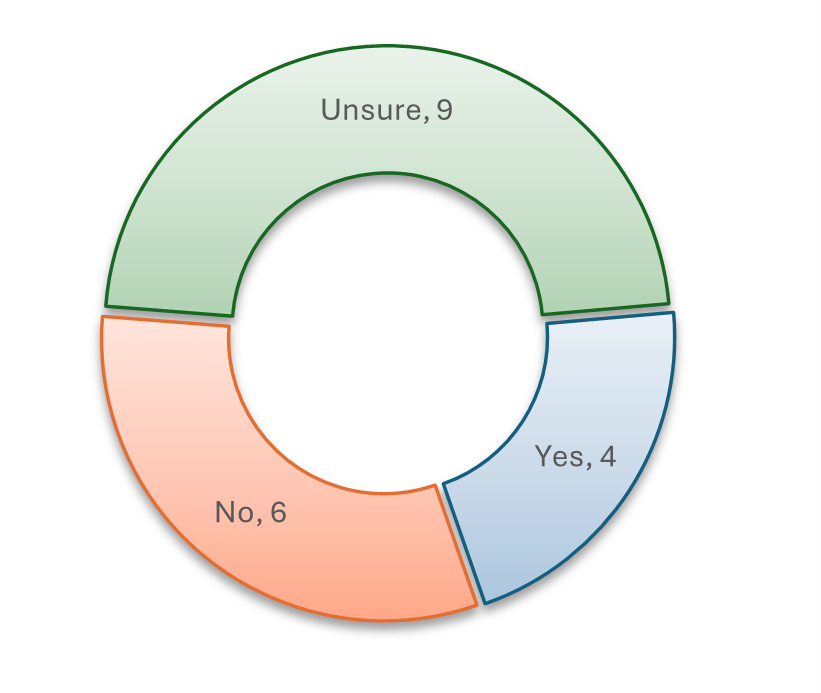

To gauge the preparedness and technical competence of AIOs in the GenAI era, they were asked, "Do you think that AIOs are adequately trained and equipped to investigate cases of academic misconduct involving GenAI tools?"

The responses revealed that only 21% of participants felt sufficiently trained and prepared to investigate GenAI-related misconduct cases, while the remaining 79% were either unsure or stated clearly that they were unprepared, as shown in Figure 11. This finding suggests that AIOs' limited expert-level competency with GenAI tools may contribute to their lack of preparedness for investigating GenAI-related academic misconduct.

Those who responded "No" or "Unsure" were then asked, "What resources and training do you think AIOs need to effectively investigate cases of academic misconduct involving GenAI tools?"

A summary of responses is given below:

Figure 11 - Confidence gauge of AIOs for GenAI-related academic misconduct investigation

- GenAI Detection Training: AIOs require structured training on GenAI detection, including how to interpret GenAI-produced content and identify trends in student work.

- Continuous Professional Development: Regular ½-day workshops and structured training programs to share issues and strategies among AIOs.

- Practical Investigation Support: Step-by-step investigation procedures, case studies, examples, and FAQs to help AIOs assess potential misconduct cases.

- Emotional and Procedural Support: Training on handling emotionally charged interactions with students, particularly as GenAI-related misconduct cases become more contentious.

- Policy Clarity: Stronger policies on the use of GenAI tools, including clearer positions on tools like Grammarly and paraphrasing software.

- Academic Unit-Level Consistency: Addressing inconsistencies in GenAI-related misconduct handling across different academic units, ensuring equitable access to training and resources.

Implications for Practice

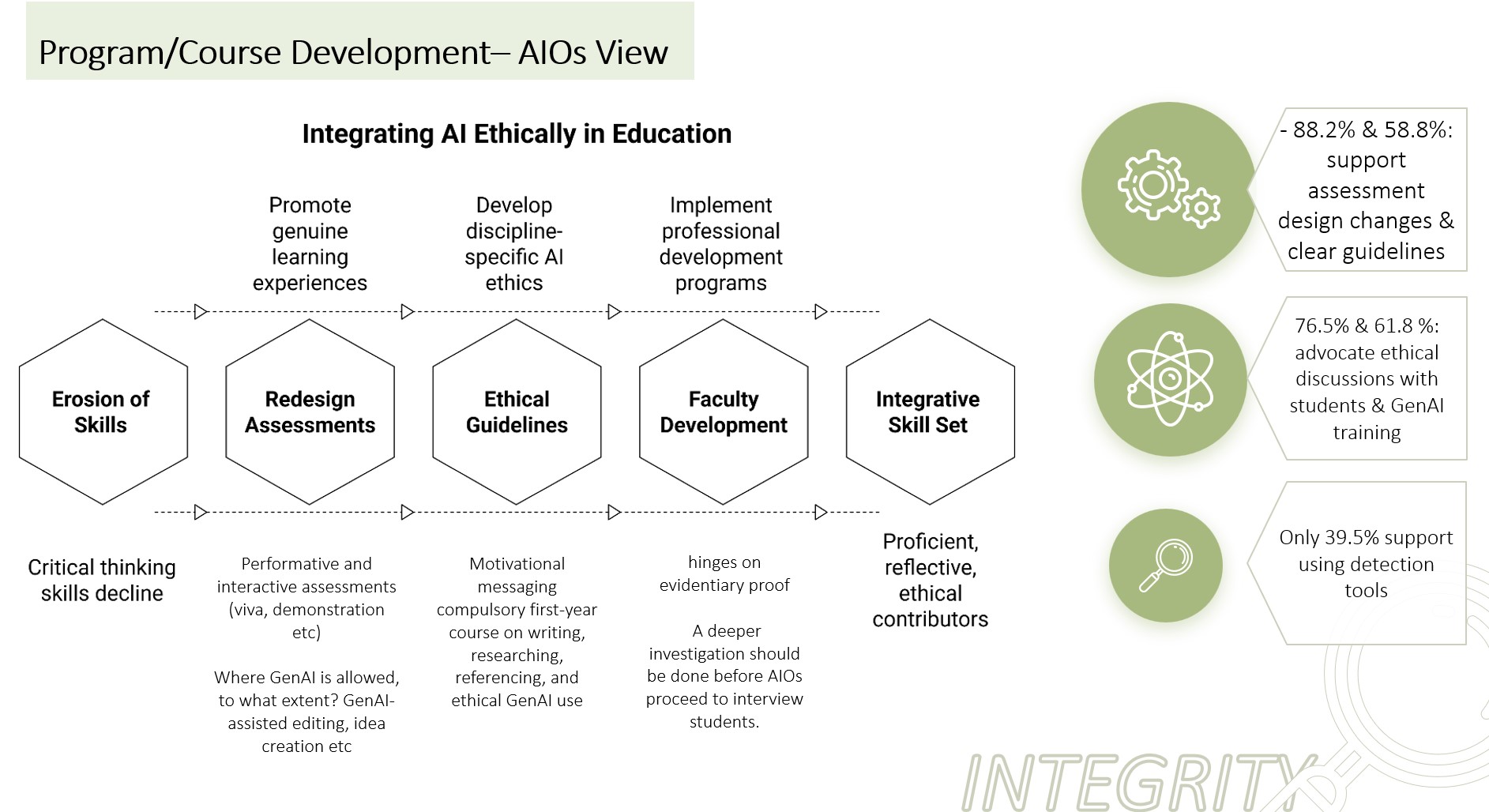

As GenAI tools become increasingly integrated into educational environments, modifying and redesigning assessments so that authentic learning can be reliably evidenced represents the most viable approach to addressing academic misconduct challenges.

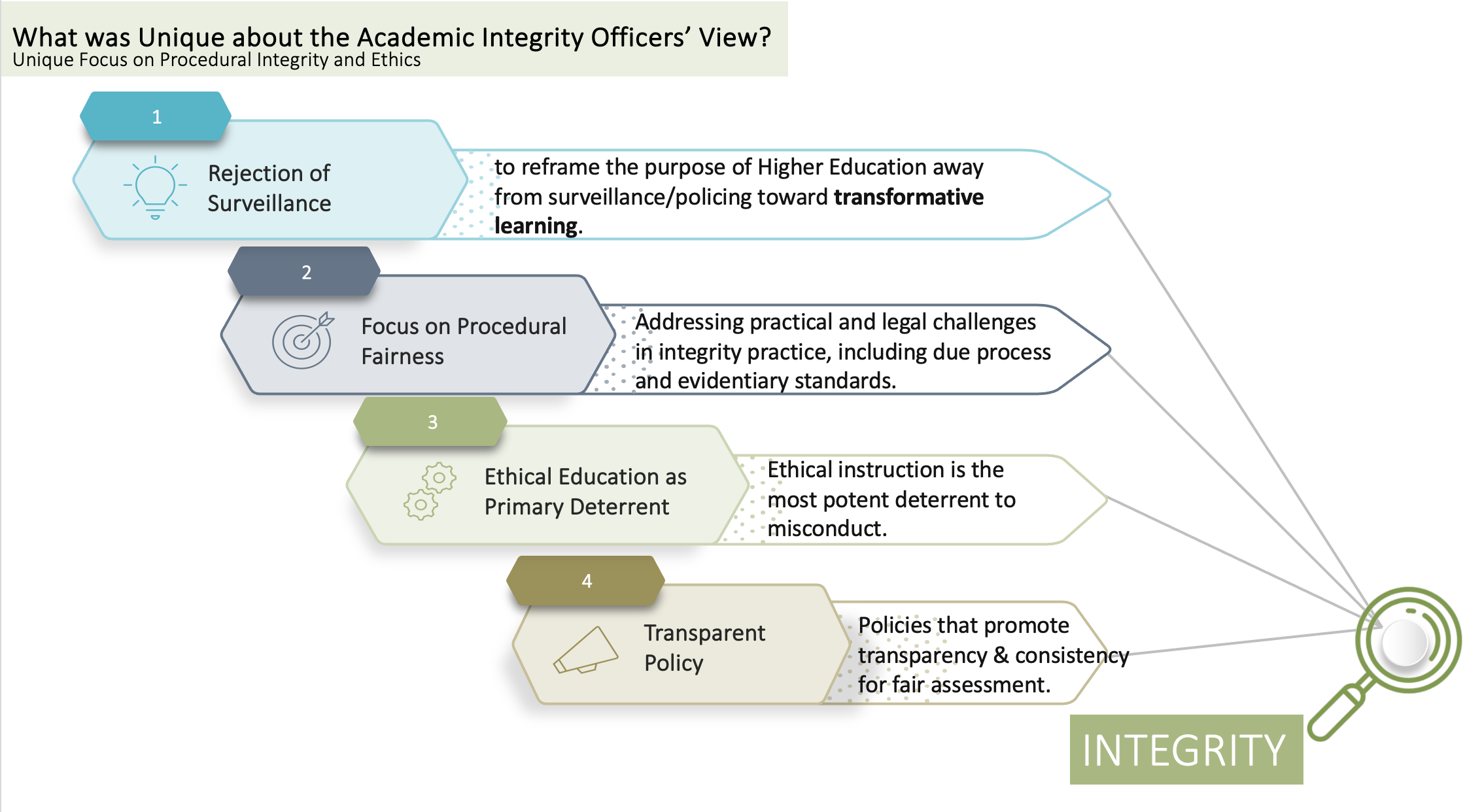

By the time this study concluded, eighteen (18) months after ChatGPT's release, AIOs recognised that comprehensive implementation of this strategy serves two purposes: clarifying appropriate versus inappropriate GenAI use, and ensuring that misconduct investigations achieve procedural fairness. When teaching staff prioritise assessment redesign and ethical discussions while AIOs develop greater technical competency with GenAI, four key elements of procedural fairness emerge in misconduct investigations.

Notably, this strategy highlights the crucial role of teaching staff in developing sustainable solutions to academic misconduct. Assessment modification that establishes clear expectations for students inherently promotes academic integrity, reinforcing the principle that integrity is a responsibility shared by all stakeholders.

This assessment-focused approach offers a sustainable pathway for mitigating academic misconduct through learning assurance while facilitating procedural fairness in investigations. By leveraging teaching staff expertise in assessment design and AIOs' growing GenAI competency, institutions can achieve fair, consistent outcomes that educate rather than merely punish students, ultimately strengthening academic integrity culture in the GenAI era.

Summary