
C |

|---|

CWE-1256 | ||

|---|---|---|

CWE-1260 | ||

|---|---|---|

This is a student-friendly explanation of the hardware weakness CWE-1260. Not ready for 2023. | ||

CWE-1300 | ||||

|---|---|---|---|---|

This is a student-friendly explanation of the hardware weakness CWE-1300 “Improper Protection of Physical Side Channels”, which is susceptible to

A hardware product with this weakness does not contain sufficient protection mechanisms to prevent physical side channels from exposing sensitive information due to patterns in physically observable phenomena such as variations in power consumption, electromagnetic emissions (EME), or acoustic emissions.

Example 1

Google’s Titan Security Key is a FIDO Universal 2nd Factor (U2F) hardware device that has been available since July 2018. Unfortunately, the security key is susceptible to side-channel attacks that observe the local electromagnetic radiation of its secure element (an NXP A700x chip, now discontinued) during an ECDSA signing operation [RLMI21]. The side-channel attack can recover the secret key and thereby clone a Titan Security Key.

Watch the USENIX Security ’21 presentation on the side-channel attack:

More examples of side-channel attacks are available here.

🛡 General mitigation

The standard countermeasures are those that apply to CWE-1300 “Improper Protection of Physical Side Channels” and its parent weakness CWE-203 “Observable Discrepancy”.

CWE-1300:

CWE-203:

References

| ||||

CWE-1384 | ||||

|---|---|---|---|---|

This is a student-friendly explanation of the hardware weakness CWE-1384 “Improper Handling of Physical or Environmental Conditions”, where a hardware product does not properly handle unexpected physical or environmental conditions that occur naturally or are artificially induced.

The weaknesses CWE-1384 and CWE-1300 can be seen as two sides of the same coin: while the latter is about leakage of sensitive information, the former is about injection of malicious signals.

Example 1

The GhostTouch attack [WMY+22] generates electromagnetic interference (EMI) signals on the scan-driving-based capacitive touchscreen of a smartphone, which result in “ghostly” touches on the touchscreen; see Fig. 1. The EMI signals can be generated, for example, using ChipSHOUTER. These ghostly touches enable the attacker to actuate unauthorised taps and swipes on the victim’s touchscreen.

Watch the authors’ presentation at USENIX Security ’22:

PCspooF is another example of an attack exploiting weakness CWE-1384.

🛡 General mitigation

Product specification should include expectations for how the product will perform when it exceeds physical and environmental boundary conditions, e.g., by shutting down.

Where possible, include independent components that can detect excess environmental conditions and are capable of shutting down the product.

Where possible, use shielding or other materials that can increase the adversary’s workload and reduce the likelihood of being able to successfully trigger a security-related failure.

References

| ||||

CWE-787 | |||

|---|---|---|---|

This is a student-friendly explanation of the software weakness CWE-787 “Out-of-bounds Write”, where the vulnerable entity writes data past the end, or before the beginning, of the intended buffer. This weakness and CWE-125 “Out-of-bounds Read” are two sides of the same coin.

An out-of-bounds write can be caused by incorrect pointer arithmetic (see Example 1), by accessing invalid pointers due to incomplete initialisation or memory release (see Example 2), etc.

Example 1

In the adjacent C code, char pointer

Reminder: In a POSIX environment, a C program can be compiled and linked into an executable using command

Example 2

In the adjacent C code, after the memory allocated to

Typically, the weakness CWE-787 can result in corruption of data, a crash, or code execution.

🛡 General mitigation

A long list of mitigation measures exists, so only a few are mentioned here:

Read about more measures here.

Example 3

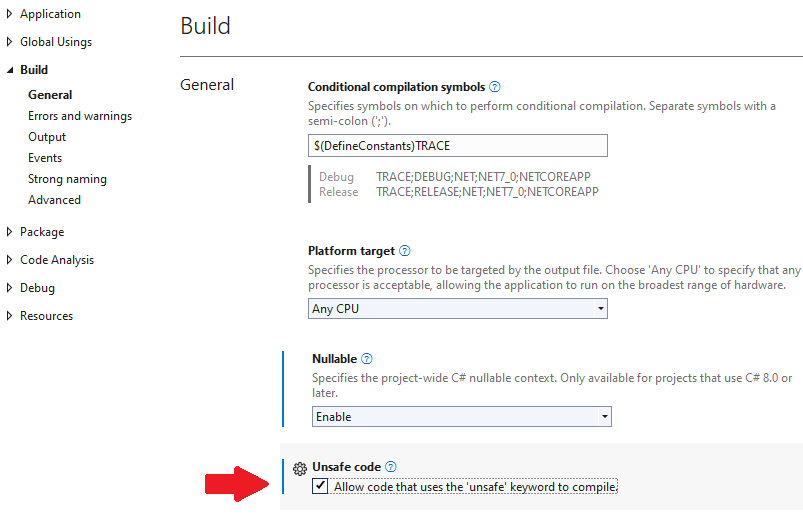

After enabling compilation of unsafe code in Visual Studio as per Fig. 1, the code above can be compiled. Once compiled and run, the code above will not trigger any runtime error, unless the line writing

Question: What runtime error would the

| |||

CWE-79 | ||||

|---|---|---|---|---|

This is a student-friendly explanation of the software weakness CWE-79 “Improper Neutralization of Input During Web Page Generation ('Cross-site Scripting')”, which is susceptible to

This weakness exists when user-controllable input is not neutralised or is incorrectly neutralised before it is placed in output that is used as a web page that is served to other users. In general, cross-site scripting (XSS) vulnerabilities occur when:

Once the malicious script is injected, a variety of attacks are achievable, e.g.,

The attacks above can usually be launched without alerting the victim. Even against careful users, URL encoding or Unicode can be used to obfuscate web requests so that they look less suspicious.

Watch an introduction to XSS on LinkedIn Learning: Understanding cross-site scripting from Security Testing: Vulnerability Management with Nessus by Mike Chapple

Watch a demonstration of XSS on LinkedIn Learning: Cross-site scripting attacks from Web Security: Same-Origin Policies by Sasha Vodnik

Example 1

The vulnerability CVE-2022-20916 caused the web-based management interface of Cisco IoT Control Center to allow an unauthenticated, remote attacker to conduct an XSS attack against a user of the interface. The vulnerability was due to the absence of proper validation of user-supplied input.

🛡 General mitigation

A long list of mitigation measures exists, so only a few are mentioned here:
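One measure commonly recommended for CWE-79 is output encoding: neutralising user-controllable values at the moment they are placed into a page. The following is a minimal Python sketch of that idea, assuming the value is inserted into an HTML element body. The helper `render_comment` and the sample payload are hypothetical and used only for illustration; `html.escape` is from the Python standard library.

```python
import html


def render_comment(author: str, comment: str) -> str:
    """Build an HTML fragment, escaping user-controlled values before insertion.

    Hypothetical helper for illustration; it only covers the HTML body context.
    """
    safe_author = html.escape(author, quote=True)
    safe_comment = html.escape(comment, quote=True)
    return f'<p class="comment"><b>{safe_author}</b>: {safe_comment}</p>'


# A typical injection attempt: the markup is rendered as inert text.
payload = '<script>alert("xss")</script>'
fragment = render_comment("mallory", payload)
print(fragment)
```

Escaping is context-specific: a value placed inside a JavaScript block, a URL, or a CSS rule needs a different encoding, so this sketch should not be read as a complete defence.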

Read about more measures here and also consult the OWASP Cross Site Scripting Prevention Cheat Sheet.

References

| ||||

CWE-917 | ||||

|---|---|---|---|---|

This is a student-friendly explanation of the software weakness CWE-917 “Improper Neutralization of Special Elements used in an Expression Language Statement ('Expression Language Injection')”. The vulnerable entity constructs all or part of an expression language (EL) statement in a framework such as Jakarta Server Pages (JSP, formerly JavaServer Pages) using externally-influenced input from an upstream component, but it does not neutralise or it incorrectly neutralises special elements that could modify the intended EL statement before it is executed.
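The pattern can be illustrated by analogy outside JSP. Python has no EL, but passing externally-influenced text to any expression interpreter raises the same concern. The sketch below is an assumption-laden illustration, not an EL implementation: the hypothetical function `evaluate_untrusted` parses an arithmetic expression with the standard `ast` module and rejects every element that is not on a small allow-list, instead of handing the raw string to an interpreter.

```python
import ast
import operator

# Hypothetical allow-list of binary operators for this sketch.
_ALLOWED_BINOPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate_untrusted(expression: str):
    """Evaluate an arithmetic expression, rejecting anything off the allow-list."""
    tree = ast.parse(expression, mode="eval")

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _ALLOWED_BINOPS:
            return _ALLOWED_BINOPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Names, calls, attribute access, etc. are the "special elements" here.
        raise ValueError(f"disallowed element: {type(node).__name__}")

    return walk(tree)


print(evaluate_untrusted("2 * (3 + 4)"))

try:
    evaluate_untrusted("__import__('os').getcwd()")
except ValueError as exc:
    print("rejected:", exc)
```

An allow-list is used rather than a block-list because it fails closed: any construct the sketch does not explicitly recognise is refused.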

Example 1

The infamous vulnerability Log4Shell (CVE-2021-44228), which occupied headlines for months in 2022, is a fitting example.

Watch an explanation of Log4Shell on YouTube:

🛡 General mitigation

Avoid adding user-controlled data into an expression interpreter. If user-controlled data must be added to an expression interpreter, one or more of the following should be performed:

By default, disable the processing of EL expressions. In JSP, set the attribute

References

| ||||

Cyber Kill Chain | ||||

|---|---|---|---|---|

The Cyber Kill Chain® framework/model was developed by Lockheed Martin as part of their Intelligence Driven Defense® model for identification and prevention of cyber intrusions. The model identifies what an adversary must complete in order to achieve its objectives. The seven steps of the Cyber Kill Chain shed light on an adversary’s tactics, techniques and procedures (TTP): reconnaissance, weaponisation, delivery, exploitation, installation, command and control (C2), and actions on objectives.

Watch a quick overview of the Cyber Kill Chain on LinkedIn Learning: Overview of the cyber kill chain from Ethical Hacking with JavaScript by Emmanuel Henri

Example 1: Modelling Stuxnet with the Cyber Kill Chain

Stuxnet (W32.Stuxnet in Symantec’s naming scheme) was discovered in 2010, with some components being used as early as November 2008 [FMC11]. Stuxnet is a large and complex piece of malware that targets industrial control systems, leveraging multiple zero-day exploits, an advanced Windows rootkit, complex process injection and hooking code, network infection routines, peer-to-peer updates, and a command and control interface [FMC11].

Watch a brief discussion of modelling Stuxnet with the Cyber Kill Chain: Stuxnet and the kill chain from Practical Cybersecurity for IT Professionals by Malcolm Shore

⚠ Contrary to what the video above claims, Stuxnet does have a command and control routine/interface [FMC11].

References

| ||||

D |

|---|

Data flow analysis | ||||||

|---|---|---|---|---|---|---|

This continues from the discussion of application security testing.

Data flow analysis is a static analysis technique for calculating facts of interest at each program point, based on the control flow graph representation of the program [vJ11, p. 1254].

A canonical data flow analysis is reaching definitions analysis [NNH99, Sec. 2.1.2]. For example, if statement is

Reaching definitions analysis determines for each statement that uses variable

Example 1 [vJ11, pp. 1254-1255]

In the example below, the definition of x at line 1 reaches line 2, but does not reach beyond line 3 because x is assigned on line 3.
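Reaching definitions can be computed with a simple iterative algorithm over the control flow graph. The following is a minimal Python sketch under stated assumptions: the four-line program in the comment is hypothetical (it is not the example from [vJ11]), each line defines at most one variable, and `PROGRAM` and `reaching_definitions` are names invented for this illustration.

```python
# Hypothetical straight-line program used only for illustration:
#   1: x = input()
#   2: y = x + 1
#   3: x = 0
#   4: z = x + y
# Each entry maps a line number to (variable defined, successor lines).
PROGRAM = {
    1: ("x", [2]),
    2: ("y", [3]),
    3: ("x", [4]),
    4: ("z", []),
}


def reaching_definitions(program):
    """Return, for each line, the set of (variable, line) definitions reaching its entry."""
    # Build predecessor lists from the successor lists.
    preds = {line: [] for line in program}
    for line, (_, succs) in program.items():
        for succ in succs:
            preds[succ].append(line)

    in_sets = {line: set() for line in program}
    out_sets = {line: set() for line in program}

    changed = True
    while changed:  # iterate until a fixed point is reached
        changed = False
        for line in sorted(program):
            var, _ = program[line]
            # Facts from all predecessors are combined by set union.
            new_in = set().union(*(out_sets[p] for p in preds[line]))
            # A new definition of var kills earlier definitions of var.
            survivors = {d for d in new_in if d[0] != var}
            new_out = survivors | {(var, line)}
            if new_in != in_sets[line] or new_out != out_sets[line]:
                in_sets[line], out_sets[line] = new_in, new_out
                changed = True
    return in_sets


result = reaching_definitions(PROGRAM)
for line in sorted(result):
    print(line, sorted(result[line]))
```

In this sketch the definition of `x` at line 1 is in the entry set of lines 2 and 3 but not of line 4, because the assignment on line 3 kills it.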

More formally, data flow analysis can be expressed in terms of lattice theory, where facts about a program are modelled as vertices in a lattice. The lattice meet operator determines how two sets of facts are combined. Given an analysis where the lattice meet operator is well-defined and the lattice is of finite height, a data flow analysis is guaranteed to terminate and converge to an answer for each statement in the program using a simple iterative algorithm.

A typical application of data flow analysis to security is to determine whether a particular program variable is derived from user input (tainted) or not (untainted). Given an initial set of variables initialised by user input, data flow analysis can determine (typically an over-approximation of) all variables in the program that are derived from user data.

References

| ||||||

Delay-tolerant networking (DTN) | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

In a mobile ad hoc network (MANET, see Definition 1), nodes move around, causing connections to form and break over time.

Definition 1: Mobile ad hoc network [PBB+17]

A wireless network that allows easy connection establishment between mobile wireless client devices in the same physical area without the use of an infrastructure device, such as an access point or a base station.

Due to mobility, a node can sometimes find itself devoid of network neighbours. In this case, the node 1️⃣ stores the messages en route to their destination (which is not the node itself), and 2️⃣ when it finds a route to the destination of the messages, forwards the messages to the next node on the route. This networking paradigm is called store-and-forward.

A delay-tolerant networking (DTN) architecture [CBH+07] is a store-and-forward communications architecture in which source nodes send DTN bundles through a network to destination nodes. In a DTN architecture, nodes use the Bundle Protocol (BP) to deliver data across multiple links to the destination nodes.

Watch a short animation from NASA:

Watch a detailed lecture from NASA:

References

| ||||||||||||