
M

Meltdown attacks

Out-of-order execution is a prevalent performance feature of modern processors for reducing latencies of busy execution units, e.g., a memory fetch unit waiting for data from memory: instead of stalling execution, a processor skips ahead and executes subsequent instructions [LSG+18]. See Fig. 1.

Fig. 1: If an executed instruction causes an exception that diverts the control flow to an exception handler, the subsequent instruction must not be executed [LSG+18, Figure 3]. Due to out-of-order execution, the subsequent instructions may already have been partially executed, but not retired. However, architectural effects of the execution are discarded.

Although the instructions executed out of order do not have any visible architectural effect on the registers or memory, they have microarchitectural side effects [LSG+18, Sec. 3].

Meltdown consists of two building blocks [LSG+18, Sec. 4], as illustrated in Fig. 2:

Operation-wise, Meltdown consists of 3 steps [LSG+18, Sec. 5.1]:
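As a rough illustration of how the steps fit together, the toy simulation below models only the cache covert channel. In the attack described in [LSG+18, Sec. 5.1], a secret byte is first read into a register, a transient instruction then touches a cache line whose index depends on that byte, and FLUSH+RELOAD finally finds out which line was touched. The sketch performs no transient execution and touches no real hardware; `ToyCache`, the latency figures, the threshold and all function names are assumptions made up for this example.

```python
import random

PAGE = 4096                       # stride between probe-array entries (assumed 4 KiB)
HIT_CYCLES, MISS_CYCLES = 40, 300  # made-up latencies for the simulation
THRESHOLD = 150                   # assumed cut-off between a hit and a miss


class ToyCache:
    """A set of cached addresses with simulated access latencies."""

    def __init__(self):
        self.lines = set()

    def flush(self, addr):
        # Models evicting one line (the FLUSH half of FLUSH+RELOAD).
        self.lines.discard(addr)

    def access(self, addr):
        # Returns a simulated latency and leaves the line cached.
        base = HIT_CYCLES if addr in self.lines else MISS_CYCLES
        self.lines.add(addr)
        return base + random.randint(0, 20)


def transient_send(cache, secret_byte):
    # Stand-in for steps 1 and 2: on real hardware this access would run
    # transiently; here we simply touch the line selected by the secret.
    cache.access(secret_byte * PAGE)


def flush_reload_receive(cache):
    # Step 3: time an access to every probe line; fast ones were cached.
    return [g for g in range(256) if cache.access(g * PAGE) < THRESHOLD]


def leak_byte(secret_byte):
    cache = ToyCache()
    for guess in range(256):
        cache.flush(guess * PAGE)          # start with every probe line evicted
    transient_send(cache, secret_byte)     # sender encodes the byte in the cache
    hits = flush_reload_receive(cache)     # receiver decodes it from timings
    return hits[0] if len(hits) == 1 else None


def leak_bytes(secret):
    return bytes(leak_byte(b) for b in secret)


if __name__ == "__main__":
    print(leak_bytes(b"secret"))
```

The point of the sketch is that the receiver never reads the secret directly: it only observes which of 256 probe lines is fast to access, which is how microarchitectural state becomes architectural.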

Fig. 2: The Meltdown attack uses exception handling or suppression, e.g., TSX (disabled by default since 2021), to run a series of transient instructions [LSG+18, Figure 5]. The covert channel consists of 1️⃣ transient instructions obtaining a (persistent) secret value and changing the microarchitectural state of the processor based on this secret value; 2️⃣ FLUSH+RELOAD reading the microarchitectural state, making it architectural and recovering the secret value.

Watch the presentation given by one of the discoverers of Meltdown attacks at the 27th USENIX Security Symposium. Since FLUSH+RELOAD was mentioned, watch the original presentation at USENIX Security ’14. More information is available on the Meltdown and Spectre website.


MITRE ATLAS

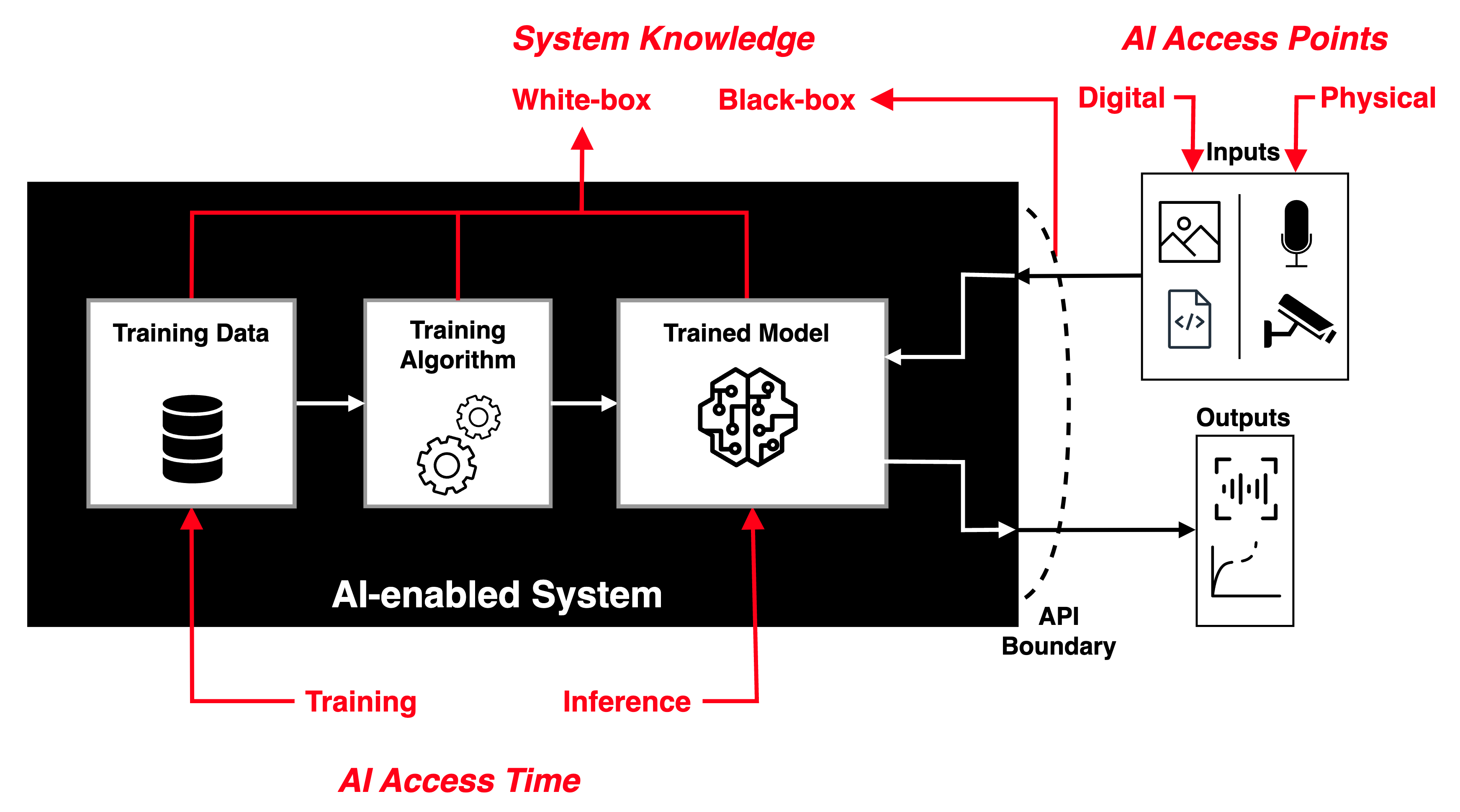

The field of adversarial machine learning (AML) is concerned with the study of attacks on machine learning (ML) algorithms and the design of robust ML algorithms to defend against these attacks [TBH+19, Sec. 1].

ML systems (and by extension AI systems) can fail in many ways, some more obvious than others. AML is not about ML systems failing when they make wrong inferences; it is about ML systems being tricked into making wrong inferences.

Consider three basic attack scenarios on ML systems:

Black-box evasion attack: Consider the most common deployment scenario in Fig. 1, where an ML model is deployed as an API endpoint.

White-box evasion attack: Consider the scenario in Fig. 1, where an ML model exists on a smartphone or an IoT edge node, which an adversary has access to.

Poisoning attacks: Consider the scenario in Fig. 1, where an adversary has control over the training data, process and hence the model.
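To make the white-box evasion scenario concrete, here is a minimal sketch assuming a hypothetical linear classifier whose weights the adversary can read off the device. It takes a single sign-of-gradient step, in the spirit of the fast gradient sign method (a technique not named in the text above), to nudge an input across the decision boundary. The weights `W`, bias `B`, input `x` and budget `eps` are invented for illustration only.

```python
def score(w, b, x):
    # Linear decision function: positive means class 1, otherwise class 0.
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def evade(w, b, x, eps):
    """Perturb each feature by at most eps, moving against the current label.

    For a linear model the gradient of the score with respect to x is w,
    so the step direction is simply the sign of each weight.
    """
    direction = -1 if predict(w, b, x) == 1 else 1
    return [xi + direction * eps * sign(wi) for xi, wi in zip(x, w)]


# Hypothetical model parameters known to the white-box adversary.
W = [2.0, -1.0, 0.5]
B = -0.5

x = [1.0, 0.5, 1.0]               # benign input, classified as 1
x_adv = evade(W, B, x, eps=0.5)   # adversarial input within an L-infinity budget

if __name__ == "__main__":
    print(predict(W, B, x), predict(W, B, x_adv))
```

In a black-box setting the adversary would not know `W` and `B` and would have to estimate the step direction from query responses instead; in a poisoning attack the adversary would alter the training process rather than the input.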

Watch an introduction to evasion attacks (informally called “perturbation attacks”) on LinkedIn Learning: Perturbation attacks and AUPs, from Security Risks in AI and Machine Learning: Categorizing Attacks and Failure Modes by Diana Kelley.

Watch an introduction to poisoning attacks on LinkedIn Learning: Poisoning attacks, from Security Risks in AI and Machine Learning: Categorizing Attacks and Failure Modes by Diana Kelley.

In response to the threats of AML, MITRE and Microsoft released the Adversarial ML Threat Matrix in 2020, in collaboration with Bosch, IBM, NVIDIA, Airbus, the University of Toronto and others. Subsequently, in 2021, more organisations joined MITRE and Microsoft to release Version 2.0, and the matrix was renamed MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems). MITRE ATLAS is a knowledge base, modelled after MITRE ATT&CK, of adversary tactics, techniques, and case studies for ML systems based on real-world observations, demonstrations from ML red teams and security groups, and the state of the possible from academic research.

Watch MITRE’s presentation.


MITRE ATT&CK

MITRE ATT&CK® is a knowledge base of adversary tactics (capturing “why”) and techniques (capturing “how”) based on real-world observations. There are three versions [SAM+20]: 1️⃣ Enterprise (first published in 2015), 2️⃣ Mobile (first published in 2017), and 3️⃣ Industrial Control System (ICS, first published in 2020). Fig. 1 below shows the fourteen MITRE ATT&CK Enterprise tactics:

The Mobile tactics and ICS tactics are summarised below. Note a tactic in the Mobile context is not the same as the identically named tactic in the ICS context.

Among the tools that support the ATT&CK framework is MITRE CALDERA™ (source code on GitHub).

A (blurry) demo is available on YouTube.

A complementary model to ATT&CK called PRE-ATT&CK was published in 2017 to focus on “left of exploit” behavior [SAM+20]:

ATT&CK is not meant to be exhaustive, because that is the role of MITRE Common Weakness Enumeration (CWE™) and MITRE Common Attack Pattern Enumeration and Classification (CAPEC™) [SAM+20].


MITRE CAPEC

MITRE’s Common Attack Pattern Enumeration and Classification (CAPEC™) effort provides a publicly available catalogue of common attack patterns to help users understand how adversaries exploit weaknesses in applications and other cyber-enabled capabilities. Attack patterns are descriptions of the common attributes and approaches employed by adversaries to exploit known weaknesses in cyber-enabled capabilities.

As of writing, CAPEC stands at version 3.9 and contains 559 attack patterns. For example, CAPEC-98 is phishing:

Definition 1: Phishing

A social engineering technique where an attacker masquerades as a legitimate entity with which the victim might interact (e.g., do business) in order to prompt the user to reveal some confidential information (typically authentication credentials) that can later be used by an attacker.

CAPEC-98 can be mapped to CWE-451 “User Interface (UI) Misrepresentation of Critical Information”.

MITRE D3FEND

MITRE D3FEND is a knowledge base — more precisely a knowledge graph — of cybersecurity countermeasures/techniques, created with the primary goal of helping standardise the vocabulary used to describe defensive cybersecurity functions/technologies.

The D3FEND knowledge graph was designed to map MITRE ATT&CK techniques (or sub-techniques) through digital artefacts to defensive techniques; see Fig. 1.

Operationally speaking, the D3FEND knowledge graph allows defensive techniques to be looked up for specific MITRE ATT&CK techniques.
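The lookup idea can be sketched with a toy graph in which offensive techniques and defensive techniques are linked only through the digital artefacts they touch. This is an illustration of the shape of the mapping, not of D3FEND's actual schema or API: the class `ToyDefenceGraph`, its methods, and every label used in the usage lines (`OFF-A`, `DEF-X`, and so on) are hypothetical placeholders rather than real ATT&CK or D3FEND identifiers.

```python
from collections import defaultdict


class ToyDefenceGraph:
    """Offensive technique -> digital artefact -> defensive technique."""

    def __init__(self):
        self._touches = defaultdict(set)    # offensive technique -> artefacts
        self._protects = defaultdict(set)   # artefact -> defensive techniques

    def add_offensive(self, technique, artefact):
        self._touches[technique].add(artefact)

    def add_defensive(self, technique, artefact):
        self._protects[artefact].add(technique)

    def countermeasures(self, offensive):
        """Return {defensive technique: artefacts it shares with the attack}."""
        result = {}
        for artefact in self._touches.get(offensive, ()):
            for defence in self._protects.get(artefact, ()):
                result.setdefault(defence, set()).add(artefact)
        return result


# Hypothetical entries, for illustration only.
graph = ToyDefenceGraph()
graph.add_offensive("OFF-A", "email")
graph.add_offensive("OFF-A", "file")
graph.add_defensive("DEF-X", "email")
graph.add_defensive("DEF-Y", "file")
graph.add_defensive("DEF-Z", "process")

if __name__ == "__main__":
    print(graph.countermeasures("OFF-A"))
```

Routing the lookup through artefacts, rather than linking attacks to defences directly, means a newly added defensive technique becomes visible for every offensive technique that touches the same artefact.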

Watch an overview of the D3FEND knowledge graph from MITRE on YouTube.

MITRE Engage

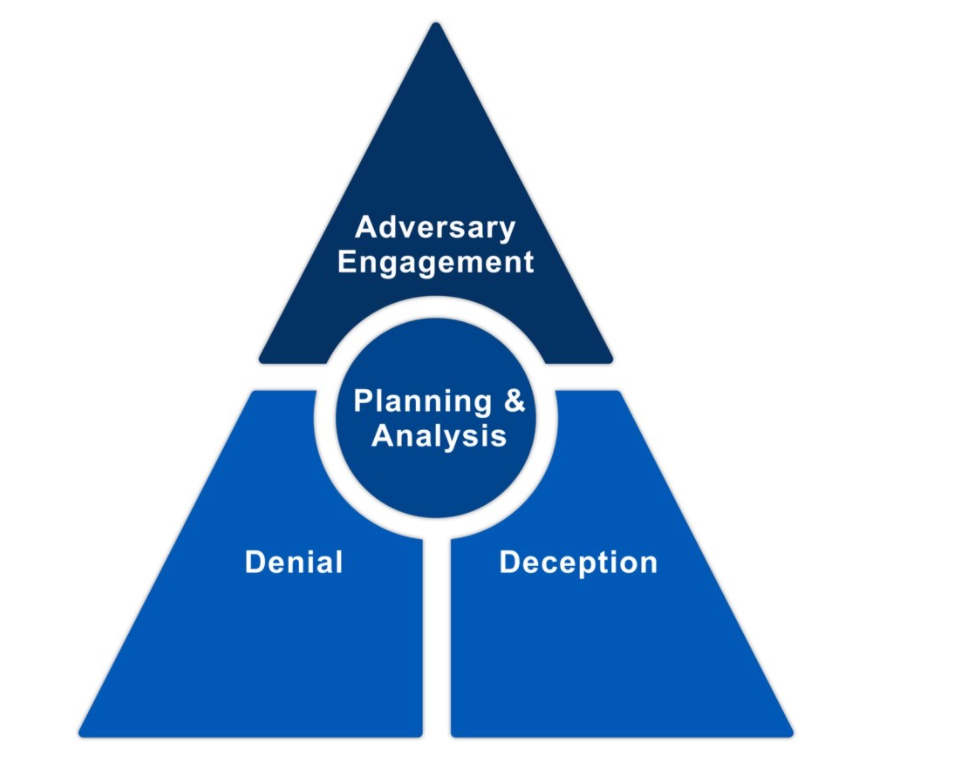

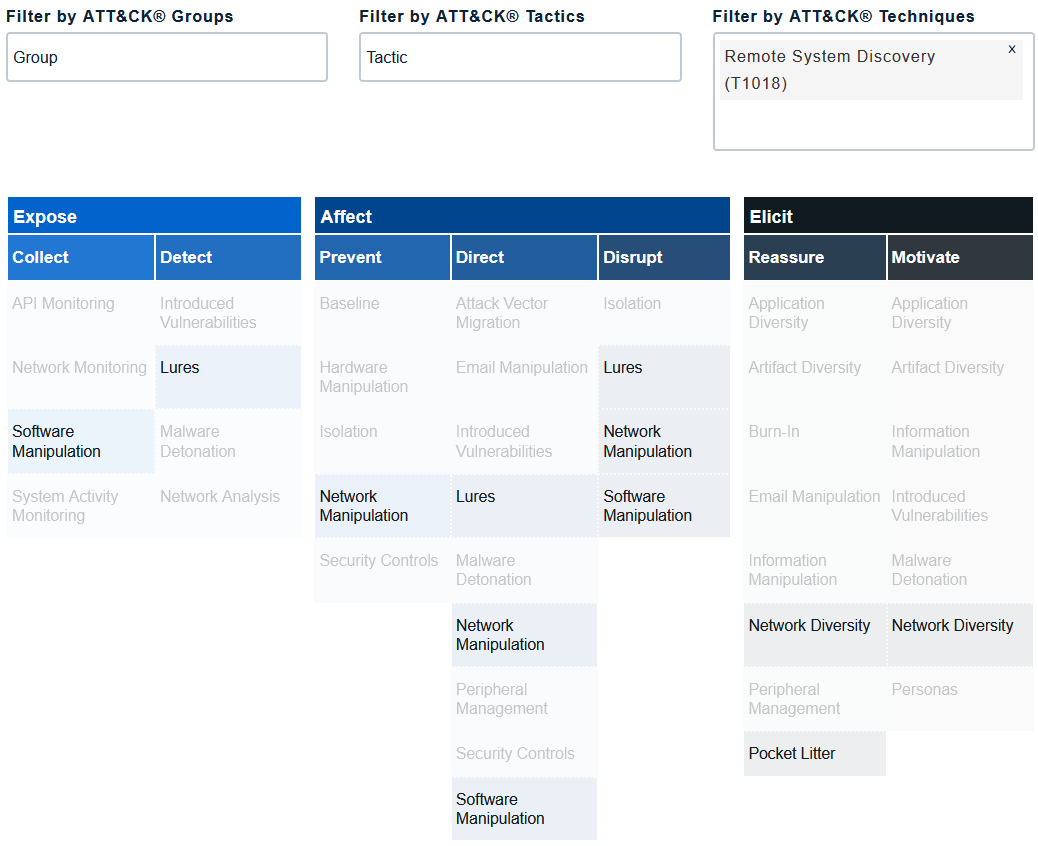

MITRE Engage (previously MITRE Shield) is a framework for planning and discussing adversary engagement operations.

Cyber defence has traditionally focussed on applying defence-in-depth to deny adversaries access to an organisation’s critical cyber assets. Increasingly, actively engaging adversaries proves to be a more effective defence [MIT22b].

The foundation of adversary engagement, within the context of strategic planning and analysis, is cyber denial and cyber deception [MIT22b]:

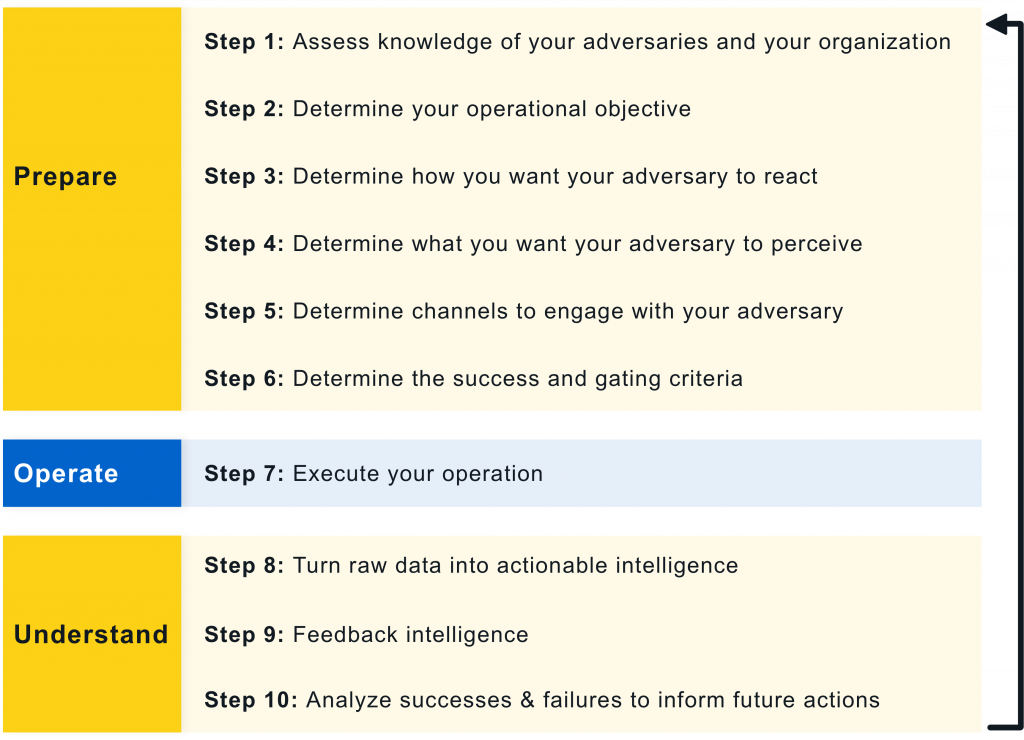

While MITRE Engage has not been around for long, the practice of cyber deception has a long history; honeypots, for example, can be traced back to the 1990s [Spi04, Ch. 3].

MITRE Engage prescribes the 10-Step Process in Fig. 1, which was adapted from the process of deception in [RW13, Ch. 19]:

Prepare phase:

Operate phase:

Understand phase:

Example 1: The Tularosa Study

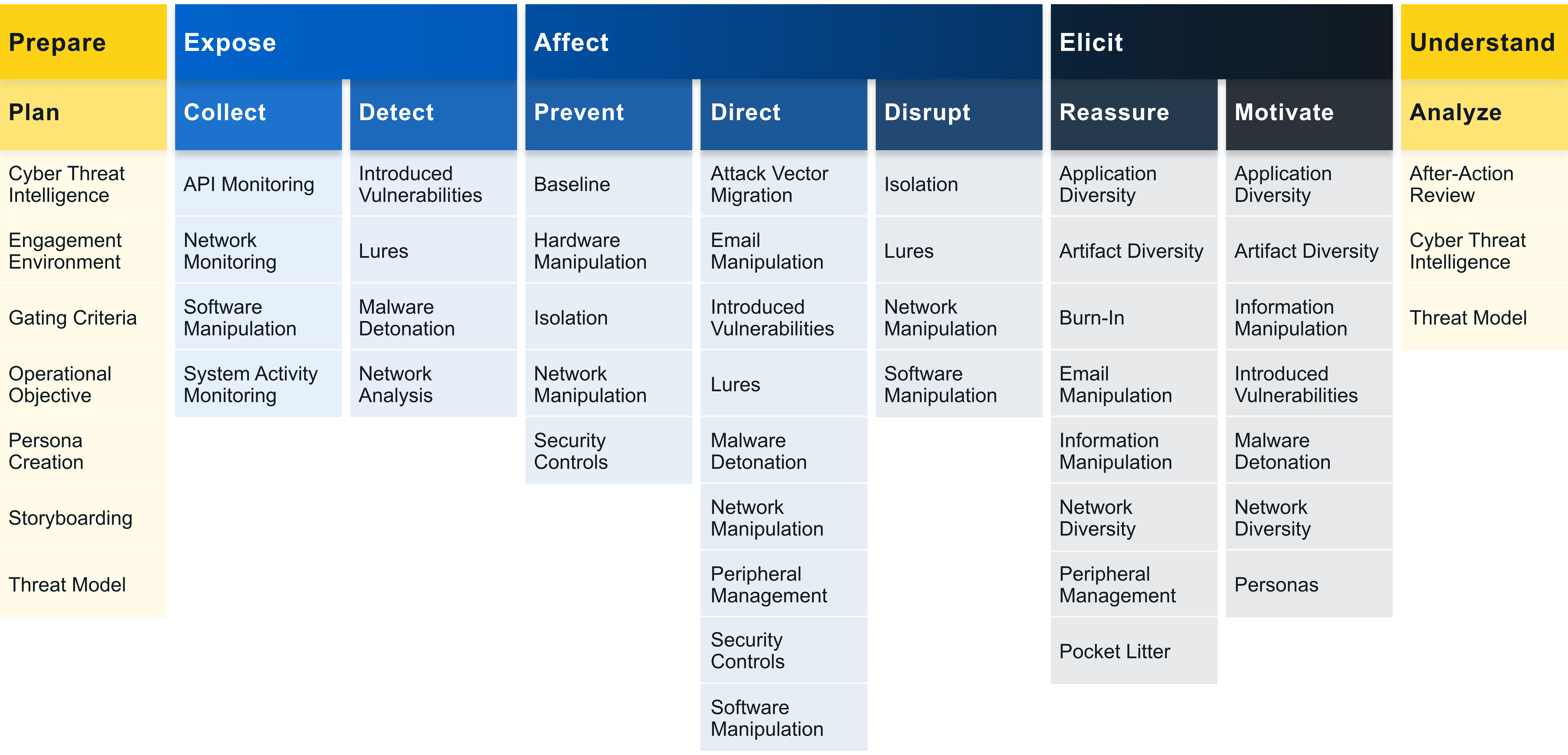

A starting point to practising cyber deception is to combine deception tools (e.g., honeypots and decoy content) with traditional defences (e.g., application programming interface monitoring, backup and recovery) [Heb22]. Contrary to intuition, cyber deception is more effective when adversaries know it is in place, because its presence exerts a psychological impact on the adversaries [Heb22]. Supporting evidence is available from the 2018 Tularosa Study [FWSR+18]; watch the accompanying presentation.

Operationalise the Methodologies

The foundation of an adversary engagement strategy is the Engage Matrix:

The Matrix serves as a shared reference that bridges the gap between defenders and decision makers when discussing and planning denial, deception, and adversary engagement activities. The Matrix allows us to apply the theoretical 10-Step Process (see Fig. 1) to an actual operation.

The top row identifies the goals: Prepare and Understand, as well as the objectives: Expose, Affect and Elicit.

The second row identifies the approaches, which let us make progress towards our selected goal. The remainder of the Matrix identifies the activities.


Model checking

Model checking is a method for formally verifying that a model satisfies a specified property [vJ11, p. 1255]. Model checking algorithms typically entail enumerating the program state space to determine if the desired properties hold.

Example 1 [CDW04]

Developed by UC Berkeley, MOdelchecking Programs for Security properties (MOPS) is a static (compile-time) analysis tool which, given a program and a security property (expressed as a finite-state automaton), checks whether the program can violate the security property. The security properties that MOPS checks are temporal safety properties, i.e., properties requiring that programs perform certain security-relevant operations in certain orders. An example of a temporal safety property is whether a setuid-root program drops root privileges before executing an untrusted program; see Fig. 1.
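The state-enumeration idea can be sketched with a tiny explicit-state checker. This is not MOPS itself and makes no claim about how MOPS is implemented; it assumes a much simpler setting in which the program is a finite control-flow graph whose edges are labelled with operations, and the property is a three-state automaton (`priv`, `unpriv`, `error`) that enters `error` if `exec` happens while still privileged. The operation names, the example graphs and the function `find_violation` are all invented for this illustration.

```python
from collections import deque

# Property automaton: (state, operation) -> next state.
# Any pair not listed leaves the property state unchanged.
PROPERTY = {
    ("priv", "drop_priv"): "unpriv",
    ("priv", "exec"): "error",
}


def step(state, op):
    return PROPERTY.get((state, op), state)


def find_violation(cfg, entry, init="priv", bad="error"):
    """Breadth-first search over (program location, property state) pairs.

    Returns the list of operations leading to the bad state, or None if
    the bad state is unreachable.
    """
    start = (entry, init)
    parent = {start: None}            # visited set plus back-pointers
    queue = deque([start])
    while queue:
        node = queue.popleft()
        loc, pstate = node
        if pstate == bad:
            trace = []
            while parent[node] is not None:
                node, op = parent[node]
                trace.append(op)
            return trace[::-1]
        for op, nxt in cfg.get(loc, []):
            succ = (nxt, step(pstate, op))
            if succ not in parent:
                parent[succ] = (node, op)
                queue.append(succ)
    return None


# Hypothetical programs: location -> [(operation, next location), ...]
BUGGY = {
    "start": [("check_config", "branch")],
    "branch": [("drop_priv", "ready"), ("skip", "ready")],  # one path forgets to drop
    "ready": [("exec", "end")],
}
SAFE = {
    "start": [("check_config", "branch")],
    "branch": [("drop_priv", "ready")],
    "ready": [("exec", "end")],
}

if __name__ == "__main__":
    print(find_violation(BUGGY, "start"))
    print(find_violation(SAFE, "start"))
```

Because the search runs over the product of program locations and property states, the same location `ready` is explored twice in the buggy program, once privileged and once unprivileged, and only the privileged copy leads to the error state.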
